
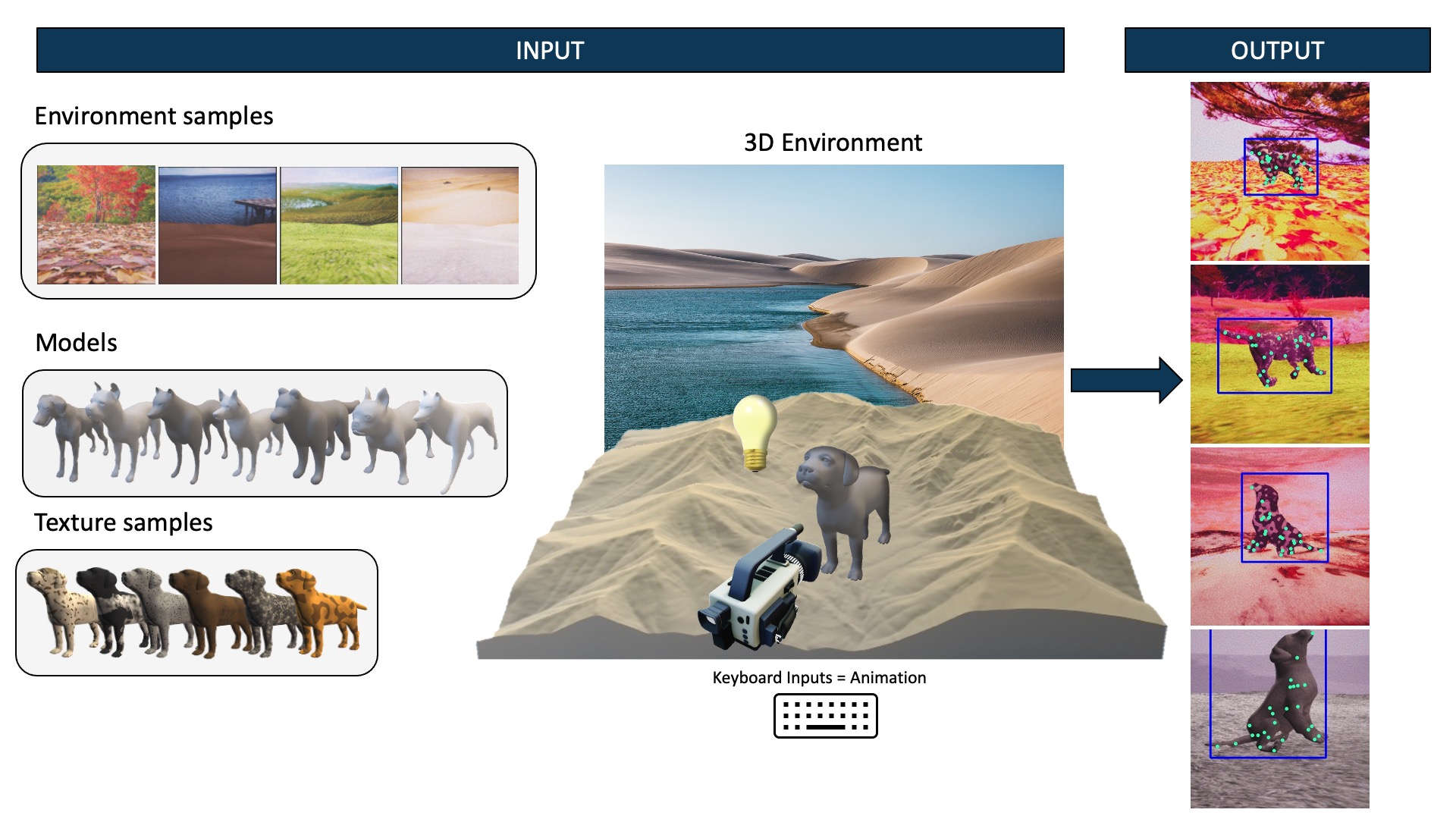

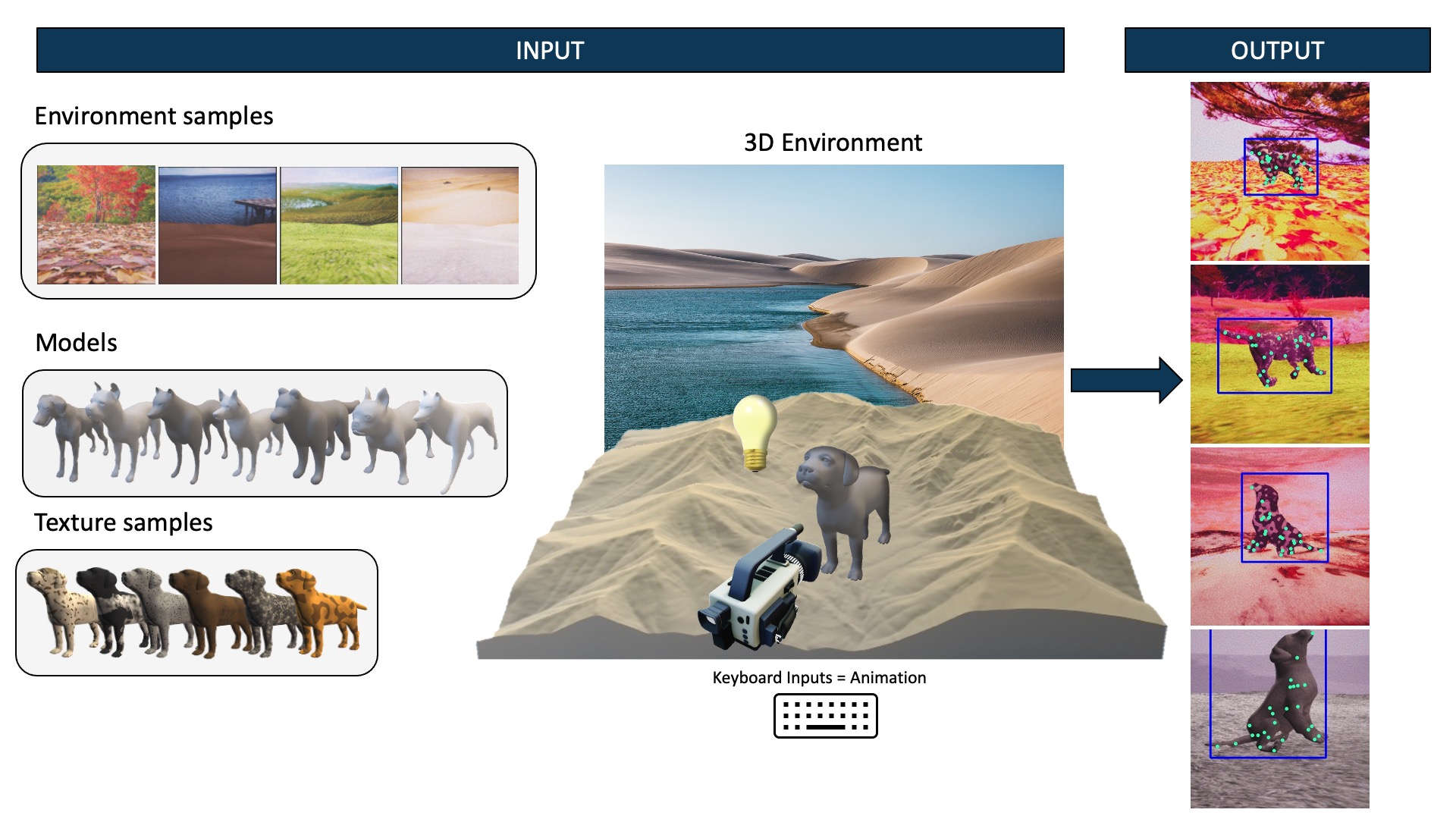

Estimating the pose of animals can facilitate the understanding of animal motion, which is fundamental in disciplines such as biomechanics, neuroscience, ethology, robotics and the entertainment industry. Human pose estimation models have achieved high performance due to the large amount of training data available. Achieving the same results for animal pose estimation is challenging due to the lack of animal pose datasets. To address this problem, we introduce SyDog, a synthetic dataset of dogs containing ground-truth pose and bounding box coordinates, generated using the Unity game engine. We demonstrate that pose estimation models trained on SyDog achieve better performance than models trained purely on real data and significantly reduce the need for the labour-intensive labelling of images. We release the SyDog dataset as a training and evaluation benchmark for research in animal motion.
